Australia stands at an inflection point in AI adoption, driven by goals to enhance productivity, strengthen cybersecurity, and increase revenue. Organisations across the country continue to embed AI technologies into their operations, whether by automating routine work or by processing huge amounts of data.

But as AI adoption accelerates, so do the risks—ranging from biased decision-making and privacy breaches to lack of transparency and job displacement. Such challenges highlight the urgent need for responsible, well-governed AI implementation.

Read on to learn about the current state of Australia’s AI ecosystem, the key AI ethics principles, and more.

The State of AI Governance in Australia

Australia’s AI ecosystem is advancing rapidly on economic, political, and technological fronts. According to the Commonwealth Scientific and Industrial Research Organisation (CSIRO), the Australian artificial intelligence (AI) market is projected to be worth $315 billion by 2028.

In terms of adoption rates, Export Finance Australia reported that 68% of Australian businesses have already integrated AI into their operations. A 2024 study by the National AI Centre (NAIC) found that 35% of small and medium businesses across Australia are adopting AI. Industries with the highest uptake include health and education (45%), manufacturing (45%), services (39%), and retail trade (38%).

Additionally, the economic and revenue benefits of AI adoption are becoming clear. PwC Australia’s 2025 AI Jobs Barometer shows that the industries most exposed to AI recorded roughly three times higher growth in revenue per employee (27%) than the least exposed industries. Also, nine in ten CEOs believe that adopting AI is crucial to achieving their business strategy in the next three to five years, according to PwC’s 28th Annual Global CEO Survey.

A 2024 report by Microsoft also reveals that Australian-based applications companies and global firms with Australian operations will generate $10.6B in annual revenue by 2035. Microsoft Australia’s Managing Director, Steven Worrall, said, “Australia has a solid foundation for AI, with its favourable business environment, strong sustainability credentials and high level of AI readiness all being among the country’s key strengths.”

Looking ahead, Australia’s AI outlook remains cautiously optimistic. Australia ranked 7th in ‘responsible AI’ and 16th in ‘AI vibrancy’ in the 2024 Stanford Global AI Power Rankings, despite its lag in ‘economic competitiveness’. To increase its pace in AI development, the Australian Government announced its plan to develop a national AI strategy, focusing on building AI skills, sovereign capabilities, and infrastructure. This new AI capability plan is expected to be released in late 2025, aiming to improve the state of AI governance in Australia.

What to Know About the Australia AI Ethics Principles

AI ethics is the multidisciplinary field centred on the moral principles and practices that guide the development and implementation of AI so that it aligns with human values and societal good. It shapes how AI systems influence human decisions in areas such as healthcare, education, and finance. To put it simply, AI ethics is the code of conduct for the responsible creation and deployment of AI systems.

In response to growing concerns about fairness and safety in AI use, the Australian Government introduced eight AI ethics principles. These principles aim to guide organisations in developing and deploying AI systems that are transparent and secure.

While applying these principles is entirely voluntary, Australian businesses are encouraged to implement them. The eight Australian AI ethics principles are:

1. Human, societal, and environmental wellbeing

The first principle emphasises that AI systems should benefit individuals, society, and the environment. It indicates that the purpose of an AI system should be identified and justified from the start. Systems that address global concerns, like those outlined in the UN’s Sustainable Development Goals, are particularly encouraged. Both positive and negative impacts of AI must also be accounted for throughout its entire lifecycle, including impacts on external stakeholders.

2. Human-centred values

AI systems should respect human rights, diversity, and the autonomy of individuals. They must work for people, not control, manipulate, or erode what makes us human. These are not abstract ideals but practical guardrails against potential harms, whether subtle bias or outright democratic disruption. Interference with certain human rights is allowed only when it is reasonable, necessary, and proportionate. Individuals interacting with AI must maintain full and effective control, and AI should only augment human cognitive, social, and cultural skills.

3. Fairness

Fairness in AI is a non-negotiable standard. The third principle clearly states that AI systems must be inclusive and accessible, and free from unfair discrimination against individuals, communities, or groups. Their development should centre on user experience and equitable access, considering the meaningful input of those who’ll be affected. Without proper measures, AI can encode bias into decisions and perpetuate societal injustices against vulnerable groups, whether by age, disability, race, sex, or gender identity.

4. Privacy protection and security

The fourth principle states that AI systems must respect and uphold privacy rights and data protection, and ensure data security. Data governance and management should be observed throughout an AI system’s lifecycle, covering data collection, anonymisation, storage, and processing. Proper security measures should also be in place to address unintended or inappropriate use of AI systems. These may include end-to-end encryption, multi-factor authentication for system access, and regular penetration testing, which help reduce data breaches and misuse.

5. Reliability and safety

AI systems should reliably operate in accordance with their intended purpose. This means making sure that AI is reliable, accurate, and, when needed, able to produce repeatable results. Continuous monitoring and testing are necessary to ensure the system meets its intended purpose. Ongoing risk management, like periodic audits and bias detection, must also be integrated into the AI lifecycle to proactively address issues. At the same time, clear accountability should be established, identifying who is responsible for making sure an AI system is reliable and safe.

6. Transparency and explainability

The sixth principle states that there must be transparency and responsible disclosure so people know when AI impacts or interacts with them. Responsible disclosure must be provided without delay to each stakeholder group, including users, creators, operators, accident investigators, regulators, legal professionals, and the public.

7. Contestability

When AI significantly impacts an individual, community, or the environment, there should be a timely process to allow users to challenge its use or outcomes. What qualifies as ‘significant’ will vary by the AI system’s context, impact, and application. Where rights are involved, contestability must also include proper oversight and a clear role for human judgment.

8. Accountability

Every AI system has a chain of human decisions behind it. The last of the Australian AI ethics principles emphasises that those responsible for each phase of the AI system’s lifecycle must be identifiable and accountable for its outcomes, and that human oversight must be present. From design and development through to operation, organisations and individuals should take ownership of outcomes, including unintended ones. The level of human control required will vary by use case, but it must always be considered deliberately.

How Boards Can Balance AI Innovation and Ethics

For AI to deliver real value, Australian boards should develop the right strategies to use it effectively and responsibly. Integrating AI meaningfully requires a rethink of business processes to fully unlock its potential. But how can Australian boards balance AI innovation and ethics?

1. Adopt a human-centric approach

Embedding human-centricity is not about vague empathy—it means mandating design processes that explicitly map how each AI use case impacts human autonomy, fairness, and rights. Boards should require Human Impact Assessments (HIAs) alongside financial business cases for AI initiatives.

These HIAs must examine not only user-facing interactions but also internal applications (e.g., employee performance monitoring tools or automated hiring systems). Create a standing AI ethics subcommittee with input from experts in human rights, behavioural science, and regulatory law to ensure the human dimension is never an afterthought.

2. Build scenario-based AI risk governance

Traditional risk registers are static—unsuited for AI, where system behaviour can change with input data, model drift, or context. Boards should demand dynamic, scenario-based risk modelling that accounts for AI-specific threats: adversarial inputs, data poisoning, unexplainable outputs, and bias propagation.

Such risks must be stress-tested against the company’s current and projected business models. For example, what if your AI-driven underwriting tool inadvertently breaches discrimination laws during a market expansion? Boards need mechanisms to simulate those scenarios in advance.

3. Require model lifecycle transparency

Board oversight should not stop at deployment approvals. Require all AI initiatives to include an end-to-end model lifecycle documentation plan—from training data sourcing and versioning, through model retraining thresholds, to decommissioning protocols. Insist on lineage tracking and audit logs that are intelligible to third-party assessors.

These logs should include not only model performance metrics but decision rationale trails (especially for high-impact systems), updated in real time or per retrain cycle. This enables both internal accountability and external audit readiness under Australian and international regulatory scrutiny.

4. Align AI KPIs with ethical metrics

Boards typically focus on innovation KPIs like speed of deployment, ROI, and market share gain. But for AI, boards must demand dual metrics: business and ethical.

Require reporting on bias mitigation success rate, percentage of AI decisions reversed via human review, and explainability compliance scores for each model in production. These metrics should be weighed and integrated into board performance evaluations. Linking ethics directly to measurable outcomes shifts responsible AI from policy rhetoric to operational discipline.

5. Establish an external-facing AI ethics review panel

For systems with the potential to impact rights, safety, or vulnerable groups, boards should mandate an independent ethics review. These panels can include external experts in law, technology, consumer advocacy, and social impact.

Make their assessments publicly available, within confidentiality limits. This not only builds trust but also pressure-tests your practices against community and legal expectations, particularly as Australia’s regulatory landscape evolves, including the Privacy Act 1988 (currently under review).

6. Prioritise AI incident response

Boards must treat AI failure as inevitable, not exceptional. Develop an AI-specific incident response framework aligned with broader cyber and operational risk systems. This includes thresholds for what constitutes an AI incident (e.g. unexplained outputs, deviation from safety parameters, discriminatory outcomes) and pre-defined escalation chains.
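One way to make incident thresholds and escalation chains concrete is to encode them as data that can be reviewed and versioned. The Python sketch below is illustrative only: the incident types, severity levels, and role names are hypothetical placeholders, not drawn from any standard or from a specific framework.

```python
from dataclasses import dataclass

# Hypothetical escalation chain: each severity lists the roles to notify, in order.
ESCALATION_CHAIN = {
    "low": ["model_owner"],
    "medium": ["model_owner", "risk_committee"],
    "high": ["model_owner", "risk_committee", "board"],
}

# Hypothetical mapping from incident type to severity.
INCIDENT_SEVERITY = {
    "unexplained_output": "low",
    "safety_parameter_deviation": "medium",
    "discriminatory_outcome": "high",
}


@dataclass
class Incident:
    system: str
    incident_type: str


def escalate(incident: Incident) -> list[str]:
    """Return the roles to notify; unknown incident types are treated as 'high'."""
    severity = INCIDENT_SEVERITY.get(incident.incident_type, "high")
    return ESCALATION_CHAIN[severity]


print(escalate(Incident("loan-underwriting", "discriminatory_outcome")))
```

Defaulting unknown incident types to the highest severity is a deliberate design choice in this sketch: an unclassified failure is escalated rather than silently ignored.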

Require designated “model owners” for each deployed system. At the same time, ensure board visibility into model status dashboards, especially for systems affecting financial, reputational, or legal exposure. Just as boards now review cybersecurity threat intelligence reports, AI systems must have parallel reporting structures.

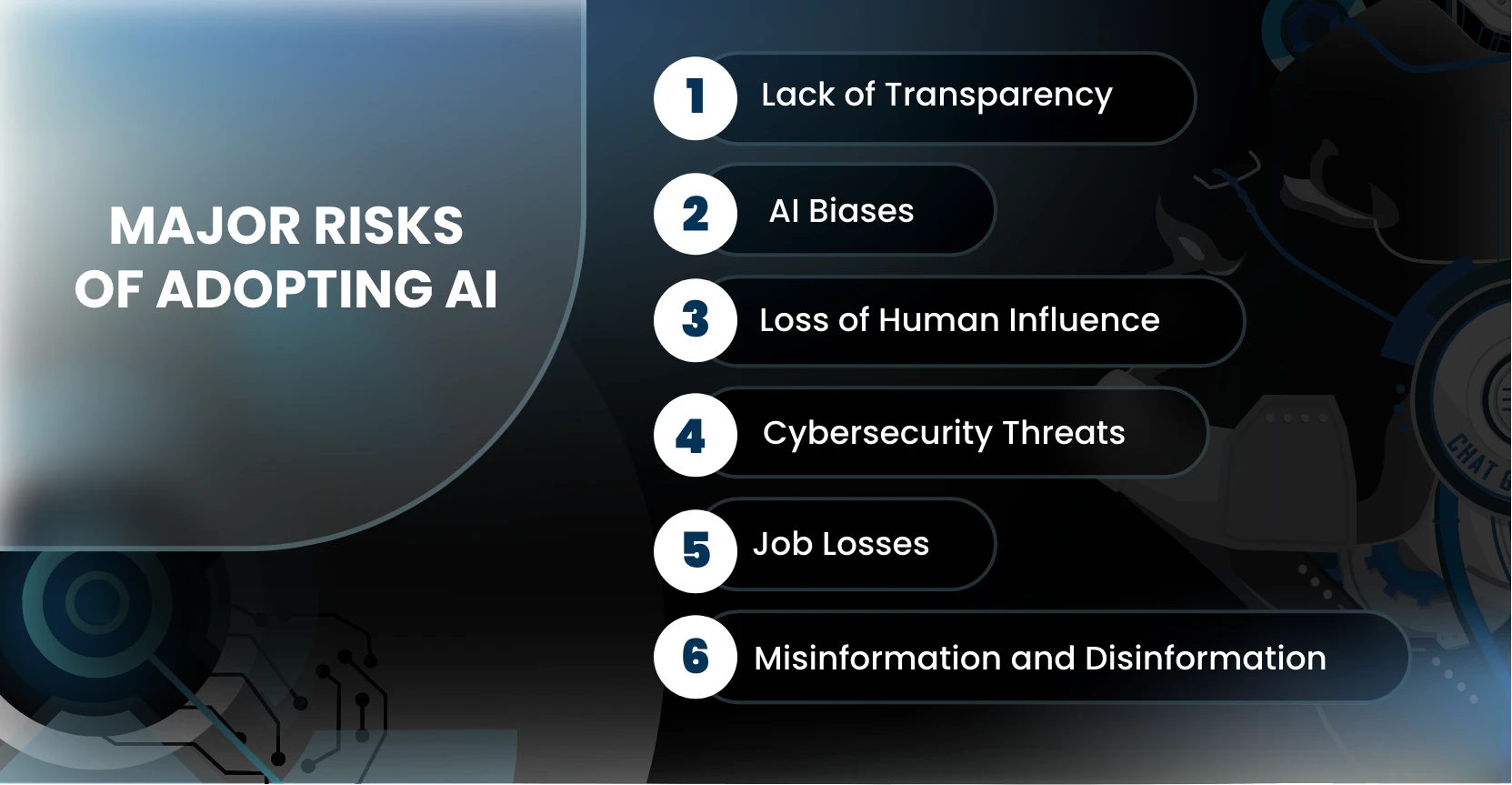

Major Risks of Adopting AI in Australia: How to Take Action

The adoption of AI technologies, if not done right, can bring about risks for businesses. Listed here are the six biggest risks when adopting AI, and what actions you can take to prevent or mitigate them.

1. Lack of Transparency

AI models and algorithms can be overly complex and tough to comprehend. That complexity makes it hard to explain an AI system’s reasoning, including what data its algorithms use and why its decisions might be biased or unsafe. Such opacity erodes trust in AI and hides its potential dangers, making it hard to act on them.

Actions to take:

- Institutionalise explainable AI tools: Require the use of XAI frameworks (e.g., LIME, SHAP, model cards), which are tools and methods designed to make AI decision-making processes more understandable to humans. This is critical for ensuring transparency, fairness, and identifying potential biases in AI systems.

- Deploy structured model documentation: Mandate “model cards” capturing data sources, performance metrics, bias evaluations, decision thresholds, and retraining logs—to empower internal and external audits.
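As a rough illustration of structured model documentation, a model card can be kept as a small, serialisable record. The sketch below assumes a minimal set of fields based on the items listed above; the field names and all example values are hypothetical, and real model card templates are usually richer.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    model_name: str
    version: str
    data_sources: list[str]
    performance_metrics: dict[str, float]
    bias_evaluations: dict[str, float]
    decision_threshold: float
    retraining_log: list[str] = field(default_factory=list)


# All values below are made up for illustration.
card = ModelCard(
    model_name="credit-risk-scorer",
    version="1.2.0",
    data_sources=["internal_loan_history_2019_2023"],
    performance_metrics={"auc": 0.87},
    bias_evaluations={"selection_rate_ratio_by_age_band": 0.91},
    decision_threshold=0.5,
    retraining_log=["2024-11-01: retrained after drift alert"],
)

# Serialise to JSON so auditors can read the card without running any code.
card_json = json.dumps(asdict(card), indent=2)
print(card_json)
```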

2. AI Biases

Bias in AI is not a bug; it is often a reflection of the data a system is trained on. Machine learning models learn from historical data, so they can inherit or amplify the societal inequities encoded in it. Whether through skewed labelling, unbalanced datasets, or biased feature selection, these problems can creep into the algorithms.

Actions to take:

- Automate fairness testing: Integrate bias tools like IBM AI Fairness 360 or the Google What-If Tool into CI pipelines to detect representation, measurement, and emergent biases across intersecting attributes.

- Curate demographically balanced datasets: Enforce data sampling rules that ensure training data covers protected classes uniformly; require pre-launch disparity metrics tracked per cohort.
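A per-cohort disparity metric can be as simple as comparing approval rates across groups. The following Python sketch computes selection rates and their min-to-max ratio on made-up data. The cohort labels, the sample records, and the 0.8 alert threshold are assumptions for illustration, not a legal standard, and dedicated fairness toolkits offer many more metrics.

```python
from collections import defaultdict


def selection_rates(records):
    """records: iterable of (cohort, approved) pairs -> approval rate per cohort."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for cohort, ok in records:
        totals[cohort] += 1
        approved[cohort] += int(ok)
    return {cohort: approved[cohort] / totals[cohort] for cohort in totals}


def disparity_ratio(rates):
    """Lowest selection rate divided by the highest; 1.0 means parity."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved in any cohort, so rates are equal
    return min(rates.values()) / highest


# Made-up decisions: cohort A is approved 8 times out of 10, cohort B 5 out of 10.
records = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)

rates = selection_rates(records)
ratio = disparity_ratio(rates)
ALERT_THRESHOLD = 0.8  # hypothetical internal threshold

print(rates, round(ratio, 3), "ALERT" if ratio < ALERT_THRESHOLD else "OK")
```

A check like this could run as a pre-launch gate in a CI pipeline, failing the build when the ratio drops below the agreed threshold.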

3. Loss of Human Influence

As AI systems take on increasingly complex roles, they risk eroding essential human capacities such as judgment, empathy, creativity, and social connection. Persistent interaction with AI instead of people, such as through chatbots or virtual assistants, can also subtly displace peer engagement and diminish social skills.

Actions to take:

- Mandate human‑in‑the‑loop controls: Define categorical boundaries where AI recommendations trigger mandatory human review, especially for appeals, policy overrides, or rights-sensitive domains.

- Quantify override rates: Require logging of every human override vs AI decision; incorporate this rate into performance dashboards as an indicator of system reliability and trustworthiness.
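The override rate described above is straightforward to compute from a decision log. This is a minimal sketch assuming each log entry records the AI decision and, where a human reviewed it, the final decision; the field names and sample entries are hypothetical.

```python
def override_rate(decisions):
    """Share of human-reviewed decisions where the reviewer changed the AI outcome.

    decisions: list of dicts with 'ai_decision' and 'final_decision' keys;
    'final_decision' is None when no human review took place.
    """
    reviewed = [d for d in decisions if d.get("final_decision") is not None]
    if not reviewed:
        return 0.0
    overridden = sum(1 for d in reviewed if d["final_decision"] != d["ai_decision"])
    return overridden / len(reviewed)


# Made-up log: four reviewed decisions (one overridden) and one unreviewed.
decision_log = [
    {"ai_decision": "approve", "final_decision": "approve"},
    {"ai_decision": "reject", "final_decision": "approve"},
    {"ai_decision": "approve", "final_decision": "approve"},
    {"ai_decision": "reject", "final_decision": "reject"},
    {"ai_decision": "approve", "final_decision": None},
]

rate = override_rate(decision_log)
print(f"Override rate: {rate:.0%}")
```

Whether unreviewed decisions belong in the denominator is a policy choice; this sketch excludes them so the rate reflects only cases a human actually examined.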

4. Cybersecurity Threats

AI systems are quickly becoming both high-value targets and high-risk assets. Cyber attackers now use generative tools to create phishing content, mimic human voices, and automate fraud with minimal effort.

Actions to take:

- Implement secure‑by‑design development: Apply threat modelling for the entire AI lifecycle—validate data integrity, enforce access controls, and deploy adversarial‑resistant architectures.

- Run adversarial red‑team exercises: Task specialised teams with injecting gradient‑based attacks (FGSM, PGD, patch attacks) to stress‑test system resilience regularly.
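To show what a gradient-based attack looks like in its simplest form, the sketch below applies one FGSM (fast gradient sign method) step to a toy logistic regression model in plain Python. The weights, input, and epsilon are made-up values chosen so the effect is visible; real red-team exercises target production-scale models with dedicated tooling.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    """Probability of the positive class under a logistic regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def sign(value):
    return (value > 0) - (value < 0)


def fgsm(weights, bias, x, y_true, epsilon):
    """One FGSM step: x_adv = x + epsilon * sign(dLoss/dx)."""
    p = predict(weights, bias, x)
    # For binary cross-entropy on logistic regression, dLoss/dx_i = (p - y) * w_i.
    gradient = [(p - y_true) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, gradient)]


# Toy model and input (all values hypothetical).
weights, bias = [2.0, -1.0], 0.0
x, y_true = [1.0, 0.5], 1

x_adv = fgsm(weights, bias, x, y_true, epsilon=0.6)

print("original score:", predict(weights, bias, x))
print("adversarial score:", predict(weights, bias, x_adv))
```

In this toy case the perturbation pushes the score from above 0.5 to below it, so the predicted class flips even though each input feature moved by only epsilon.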

5. Job Losses

AI is reshaping the workforce, creating new roles while making others obsolete. While demand for AI-related specialists rises, roles rooted in routine are increasingly at risk. The real challenge is not whether AI will replace jobs, but whether businesses can adapt fast enough to reskill and redeploy people in time.

Actions to take:

- Launch structured reskilling programs: Mandate annual upskilling targets and deploy AI use‑case incubators where employees collaboratively integrate AI into their workflows, measuring success by augmentation, not elimination.

- Track workforce impact metrics: Board dashboards should include an “AI augmentation ratio”, the number of staff whose roles are enhanced by AI relative to the number displaced, to ensure alignment with human-value strategies.

6. Misinformation and Disinformation

AI has lowered the barrier for producing falsehoods at scale. From AI-generated robocalls to all sorts of deepfakes, malicious actors use generative tools to manipulate opinion and blur the line between truth and fabrication. Deepfakes spread rapidly across social platforms, damaging reputations and opening new fronts for extortion.

Actions to take:

- Embed provenance tracking technology: Require watermarking, cryptographic signatures, or AI‑generated content markers to authenticate and trace all external gen-AI outputs tied to the enterprise.

- Monitor deployed models: Actively scan for misuse of your generative models via API endpoints—trigger alerts and key revocation if outputs are used to create deepfakes or misleading content.
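As one possible building block for provenance tracking, generated content can be signed so that later copies can be checked against the original. The sketch below uses an HMAC from Python's standard library; the secret key and sample text are placeholders. HMAC is a symmetric scheme, so it only suits verification by parties holding the key, and third-party verification would typically call for asymmetric signatures instead.

```python
import hashlib
import hmac

# Placeholder key for illustration; in practice this would come from a secrets manager.
SECRET_KEY = b"replace-with-a-managed-secret"


def sign_content(content: str, key: bytes = SECRET_KEY) -> str:
    """Return a hex HMAC-SHA256 signature for a piece of generated content."""
    return hmac.new(key, content.encode("utf-8"), hashlib.sha256).hexdigest()


def verify_content(content: str, signature: str, key: bytes = SECRET_KEY) -> bool:
    """Check a signature using a constant-time comparison."""
    return hmac.compare_digest(sign_content(content, key), signature)


generated_text = "Quarterly summary drafted by the internal assistant."
signature = sign_content(generated_text)

print(verify_content(generated_text, signature))        # untouched content
print(verify_content(generated_text + "!", signature))  # altered content
```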

Convene: Your Secure Hub for AI Risk Oversight and Governance

As AI adoption accelerates, Australian boards are under pressure to oversee not just innovation but its ethical implications. From opaque algorithmic decisions to data privacy and bias, the boardroom is becoming a frontline for AI-related risks. However, many boards still lack the time and tools to manage such challenges with confidence.

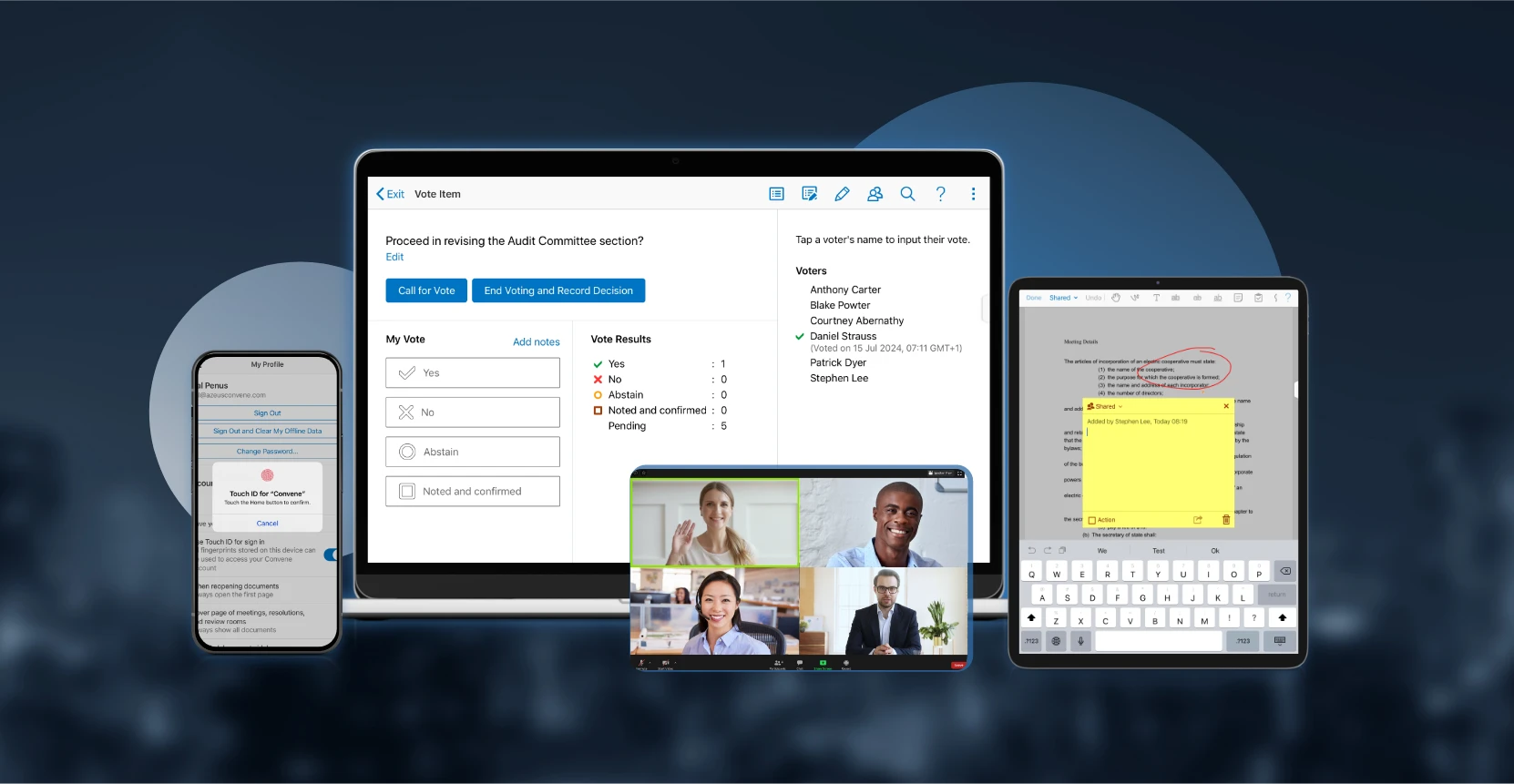

Convene, a globally recognised board management software, provides a secure, centralised environment for board meetings, document collaboration, decision tracking, and governance reporting. Compliant with ISO 27001, SOC 2, and other global standards, Convene is purpose-built for boards navigating high-stakes risks and decisions.

Now, with the launch of Convene AI, Australian boards can take their AI governance even further. With features like an intelligent meeting assistant, automated minute-taking, and AI-powered action tracking, directors can collaborate and ensure no decision falls through the cracks. All this runs inside a secure AWS environment with enterprise-grade privacy safeguards, ensuring your board remains fast, informed, and fully in control.

Request a demo today and learn more about Convene.

Jielynne is a Content Marketing Writer at Convene. With over six years of professional writing experience, she has worked with several SEO and digital marketing agencies, both local and international. She thrives on crafting clear marketing copy and creative content for Convene’s various platforms, such as the website and social media. Jielynne displays a decided lack of knowledge about football and calculus, but proudly excels in literary arts and corporate governance.